Tag: osama oracle

New Features In 11g / ADRCI

What is It ?

diagnostic data such as traces, dumps, the alert log, health monitor reports, and more. It has a unified directory structure across multiple instances and multiple products. Beginning with Release 11g, the database, Automatic Storage Management (ASM), and other Oracle products or components store all diagnostic data in the ADR. Each instance of each product stores diagnostic data underneath its own ADR home directory. The ADR’s unified directory structure enables customers and Oracle Support to correlate and analyze diagnostic data across multiple instances and multiple products.

Contain :

adrci> show problem

adrci> show incident

adrci> show incident -mode detail -p “incident_id=”incident_id””

adrci> show trace “trace file name

adrci> ips create package problem 1 correlate all

adrci> ips generate package 1 in “/home/oracle”

adrci> show tracefile -rt

adrci> show control

SHORTP_POLICY :Retention for ordinary trace files

LONGP_POLICY :Retention for like incident files

adrci> set control (SHORTP_POLICY = 360) ===>15days

adrci> set control (LONGP_POLICY = 2160) ===>90 Days

adrci> show control

Following command will manually purge all tracefiles older than 2 days (2880 minutes):

adrci> purge -age 2880 -type trace

adrci> purge -age 129600 -type ALERT ===> purging ALERT older than 90 days

adrci> purge -age 43200 -type INCIDENT ===> purging INCIDENT older than 30 days

adrci> purge -age 43200 -type TRACE ===> purging TRACE older than 30 days

adrci> purge -age 43200 -type CDUMP ===> purging CDUMP older than 30 days

adrci> purge -age 43200 -type HM ===> purging HM older than 30 days

adrci> show tracefile -rt

1-ADRCI Tips

buffer cache and Shared Pool

The buffer cache is part of the SGA. It holds copies of data blocks so as they can be accessed quicker by oracle than by reading them off disk.

alter system flush buffer_cache.

Shared Pool

- Optimized query plans

- Security checks

- Parsed SQL statements

- Packages

- Object informatio.

Shared Pool Latch :

used when memory is allocated or freed in the shared pool.

Library Cache latch :

this latch protects operations within the library cache.

Flush Shared Pool

alter system flush shared_pool

Allocation in memory

Memory in the shared pool is managed and freed in a LRU fashion, that is, Memory that isn’t used for long gets aged out first. This is in contrast to the large pool, where memory is managed in a heap (using allocate and free).

Check Shared Pool Size :

select name, bytes/1024/1024 "MB" from v$sgastat where pool = 'shared pool'

order by bytes desc;

Refence Link :

1-Buffer Cache

2-Shared Pool

Use RMAN to Manage Oracle Files / DataFiles

Report of database schema for database with db_unique_name ORCL

List of Permanent Datafiles

===========================

File Size(MB) Tablespace RB segs Datafile Name

—- ——– ——————– ——- ————————

1 750 SYSTEM *** /u01/app/oracle/oradata/ORCL/system01.dbf

2 1150 SYSAUX *** /u01/app/oracle/oradata/ORCL/sysaux01.dbf

3 444 UNDOTBS1 *** /u01/app/oracle/oradata/ORCL/undotbs01.dbf

4 120 USERS *** /u01/app/oracle/oradata/ORCL/users01.dbf

5 345 EXAMPLE *** /u01/app/oracle/oradata/ORCL/example01.dbf

8 3277 SOE *** /u01/app/oracle/product/11.2.0.2/db_1/dbs/soe.dbf

List of Temporary Files

=======================

File Size(MB) Tablespace Maxsize(MB) Tempfile Name

—- ——– ——————– ———– ——————–

1 370 TEMP 32767 /u01/app/oracle/oradata/ORCL/temp01.dbf

RMAN>

Copy the file(s) to the new location.

RMAN> COPY DATAFILE 8 TO '/u01/app/oracle/oradata/ORCL/soe.dbf';

Turn the tablespace to offline. We could have turned the tablespace offline before the copy, removing the need for a recovery, but the tablespace would have been offline longer using that method.

RMAN> SQL 'ALTER TABLESPACE osama OFFLINE';

Switch to the new datafile copy(s) and recover the tablespace.

RMAN> SWITCH DATAFILE 8 TO COPY;

RMAN> RECOVER TABLESPACE osama;

Remove the old datafile(s).

Done .

Osama Mustafa

Manage Oracle Files / DataFiles Part 3

SQL> SELECT name FROM v$datafile;

NAME

———————————————————

C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\SYSTEM01.DBF

C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\UNDOTBS01.DBF

C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\SYSAUX01.DBF

C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\USERS01.DBF

4 rows selected.

SQL>

SQL> SHUTDOWN IMMEDIATE

Database closed.

Database dismounted.

ORACLE instance shut down.

You can rename Datafiles , Move it now .

SQL> STARTUP MOUNT

ORACLE instance started.

Total System Global Area 167772160 bytes

Fixed Size 787968 bytes

Variable Size 61864448 bytes

Database Buffers 104857600 bytes

Redo Buffers 262144 bytes

Database mounted.

SQL> ALTER DATABASE RENAME FILE ‘C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\USERS01.DBF’ –

> TO ‘C:\ORACLE\PRODUCT\11.2.0\ORADATA\ORCL\RENAME_USERS01.DBF’;

Database altered.

SQL> ALTER DATABASE OPEN;

Database altered.

SQL>

Done

Enjoy

Osama mustafa

ORA-12557: TNS:protocol adapter not loadable

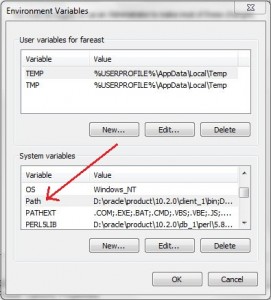

and it must be related to Windows Environment or Oracle Home PATH because sqlplus command works smoothly when I execute it inside ORACLE_HOME\bin.

RUN: SYSDM.CPL to open Windows System Properties

Click on Advanced Tab > Environment Variables…

Click the Path variable under System Variable, then click Edit…

change the order between Oracle Client Home and Oracle DB Home:

From: D:\oracle\product\10.2.0\client_1\bin;D:\oracle\product\10.2.0\db_1\bin;

To: D:\oracle\product\10.2.0\db_1\bin;D:\oracle\product\10.2.0\client_1\bin;

in other words, put the Oracle DB Home in front of the other path.

Or i Don’t love this way , Since its not actual solution but its solve problem sometimes :

1-Remove ORACLE_HOME From environment Variable .

2- Restart PC

Done

Osama mustafa

ORA-39152: Table exists

Cause :

Using APPEND to import the existing tables, as to not overrite them gives the following error:

Solution :

Truncating the table preserves the structure of the table for future use, so you are seeing this error message because there is a constraint or index in place.

To get around this you can use the following DataPump import parameters:

CONTENT=DATA_ONLY TABLE_EXISTS_ACTION=APPEND EXCLUDE=INDEX,CONSTRAINTThank you

Osama mustafa

Cursor in EXECUTE IMMEDIATE

syntax :

declare

SQL_Text varchar2(32760) := 'qurey'; --your query goes here

cur sys_refcursor;

begin

open cur for SQL_Text;

end;

example :

V_query := ‘Cursor statement’ ;

Link Useful :

1-Blog

2-Cursor Loop Example

Enjoy

Osama mustafa

Tablespace growth

SELECT TO_CHAR (sp.begin_interval_time,’DD-MM-YYYY’) days

, ts.tsname

, max(round((tsu.tablespace_size* dt.block_size )/(1024*1024),2) ) cur_size_MB

, max(round((tsu.tablespace_usedsize* dt.block_size )/(1024*1024),2)) usedsize_MB

FROM DBA_HIST_TBSPC_SPACE_USAGE tsu

, DBA_HIST_TABLESPACE_STAT ts

, DBA_HIST_SNAPSHOT sp

, DBA_TABLESPACES dt

WHERE tsu.tablespace_id= ts.ts#

AND tsu.snap_id = sp.snap_id

AND ts.tsname = dt.tablespace_name

AND ts.tsname NOT IN (‘SYSAUX’,’SYSTEM’)

GROUP BY TO_CHAR (sp.begin_interval_time,’DD-MM-YYYY’), ts.tsname

ORDER BY ts.tsname, days;

column “Percent of Total Disk Usage” justify right format 999.99

column “Space Used (MB)” justify right format 9,999,999.99

column “Total Object Size (MB)” justify right format 9,999,999.99

set linesize 150

set pages 80

set feedback off

set line 5000

column “SEGMENT_NAME” justify left format A30

column “TABLESPACE_NAME” justify left format A30

select * from (select c.TABLESPACE_NAME,c.segment_name,to_char(end_interval_time, ‘MM/DD/YY’) mydate, sum(space_used_delta) / 1024 / 1024 “Space used (MB)”, avg(c.bytes) / 1024 / 1024 “Total Object Size (MB)”,

round(sum(space_used_delta) / sum(c.bytes) * 100, 2) “Percent of Total Disk Usage”

from

dba_hist_snapshot sn,

dba_hist_seg_stat a,

dba_objects b,

dba_segments c

where begin_interval_time > trunc(sysdate) – 10

and sn.snap_id = a.snap_id

and b.object_id = a.obj#

and b.owner = c.owner

and b.object_name = c.segment_name

and c.segment_name = ‘S_PARTY’

group by c.TABLESPACE_NAME,c.segment_name,to_char(end_interval_time, ‘MM/DD/YY’)

order by c.TABLESPACE_NAME,c.segment_name,to_date(mydate, ‘MM/DD/YY’));

set pages 80

set feedback off

column “OBJECT_NAME” justify left format A30

column “SUBOBJECT_NAME” justify left format A30

column “OBJECT_TYPE” justify left format A30

column “Tablespace Name” justify left format A30

set line 5000

SELECT o.OWNER , o.OBJECT_NAME , o.SUBOBJECT_NAME , o.OBJECT_TYPE ,

t.NAME “Tablespace Name”, s.growth/(1024*1024) “Growth in MB”,

(SELECT sum(bytes)/(1024*1024)

FROM dba_segments

WHERE segment_name=o.object_name) “Total Size(MB)”

FROM DBA_OBJECTS o,

( SELECT TS#,OBJ#,

SUM(SPACE_USED_DELTA) growth

FROM DBA_HIST_SEG_STAT

GROUP BY TS#,OBJ#

HAVING SUM(SPACE_USED_DELTA) > 0

ORDER BY 2 DESC ) s,

v$tablespace t

WHERE s.OBJ# = o.OBJECT_ID

AND s.TS#=t.TS#

AND o.OWNER=’SIEBEL’

ORDER BY 6 DESC

/

set feedback on

select * from (select c.TABLESPACE_NAME,c.segment_name “Object Name”,b.object_type,

sum(space_used_delta) / 1024 / 1024 “Growth (MB)”

from dba_hist_snapshot sn,

dba_hist_seg_stat a,

dba_objects b,

dba_segments c

where begin_interval_time > trunc(sysdate) – &days_back

and sn.snap_id = a.snap_id

and b.object_id = a.obj#

and b.owner = c.owner

and b.object_name = c.segment_name

and c.owner =’SIEBEL’

group by c.TABLESPACE_NAME,c.segment_name,b.object_type)

order by 3 asc;

Thank you

UTL_MAIL Or send email from Oracle Database

–>

$ORACLE_HOME/rdbms/admin/utlmail.sql

$ORACLE_HOME/rdbms/admin/utlsmtp.sql

$ORACLE_HOME/rdbms/admin/prvtmail.plb

SQL> GRANT EXECUTE ON utl_smtp TO PUBLIC;

alter system set smtp_out_server = ‘SMTP_SERVER_IP_ADDRESS:SMTP_PORT’ scope=both;

eg: alter system set smtp_out_server = ’172.25.90.165:25′ scope=both;