You need to know the difference between:

- Role

- RoleBinding

- ClusterRole

- ClusterRoleBinding

Please refer to the Kubernetes documentation on RBAC authorization for full details.

A Role always sets permissions within a particular namespace; when you create a Role, you have to specify the namespace it belongs in.

ClusterRole, by contrast, is a non-namespaced resource. The resources have different names (Role and ClusterRole) because a Kubernetes object always has to be either namespaced or not namespaced; it can’t be both.

A RoleBinding is namespace-scoped: it grants permissions only within its own namespace. A ClusterRoleBinding is cluster-scoped: it grants permissions across all namespaces.

ClusterRoles and ClusterRoleBindings are useful in the following cases:

- Give permissions for non-namespaced resources like nodes

- Give permissions for resources in all the namespaces of a cluster

- Give permissions for non-resource endpoints like /healthz
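As a rough illustration of the three cases above, here is a minimal sketch of a ClusterRole and a ClusterRoleBinding. The names `cluster-reader-example` and `cluster-reader-example-binding` are hypothetical and chosen only for this illustration; the subject `dev` is the same user as in the example later in this post.

```yaml
# Hypothetical example: the object names below are illustrative assumptions.
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  # No namespace field here, because a ClusterRole is not namespaced.
  name: cluster-reader-example
rules:
  # Case 1: nodes are a non-namespaced resource in the core API group.
  - apiGroups: [""]
    resources: ["nodes"]
    verbs: ["get", "watch", "list"]
  # Case 2: pods are namespaced; bound through a ClusterRoleBinding,
  # this rule applies to pods in every namespace.
  - apiGroups: [""]
    resources: ["pods"]
    verbs: ["get", "watch", "list"]
  # Case 3: non-resource endpoints use nonResourceURLs instead of resources.
  - nonResourceURLs: ["/healthz", "/healthz/*"]
    verbs: ["get"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: cluster-reader-example-binding
subjects:
  - kind: User
    name: dev
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: cluster-reader-example
  apiGroup: rbac.authorization.k8s.io
```

Note that rules with `nonResourceURLs` are only valid in a ClusterRole, and they take effect only when bound with a ClusterRoleBinding.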

A RoleBinding can also reference a ClusterRole to grant the permissions defined in that ClusterRole to resources inside the RoleBinding’s namespace. This kind of reference lets you define a set of common roles across your cluster, then reuse them within multiple namespaces.
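To make this concrete, the sketch below binds the built-in `view` ClusterRole (one of the default user-facing roles that Kubernetes ships with) to the `dev` user, but only inside the `beebox-mobile` namespace. The binding name `dev-view` is a hypothetical name picked for illustration.

```yaml
# Hypothetical example: "dev-view" is an illustrative name.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: dev-view
  # The permissions apply only inside this namespace,
  # even though the referenced role is a ClusterRole.
  namespace: beebox-mobile
subjects:
  - kind: User
    name: dev
    apiGroup: rbac.authorization.k8s.io
roleRef:
  # Reference a ClusterRole instead of a Role.
  kind: ClusterRole
  name: view
  apiGroup: rbac.authorization.k8s.io
```

The same ClusterRole could be bound in another namespace with a second RoleBinding, which avoids duplicating the rule definitions in each namespace.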

Example

Create a Role for the dev User

1. Create a Role spec file named role.yaml:

       apiVersion: rbac.authorization.k8s.io/v1
       kind: Role
       metadata:
         namespace: beebox-mobile
         name: pod-reader
       rules:
       - apiGroups: [""]   # "" indicates the core API group
         resources: ["pods", "pods/log"]
         verbs: ["get", "watch", "list"]   # read-only access

2. Save and exit the file by pressing Escape followed by :wq.

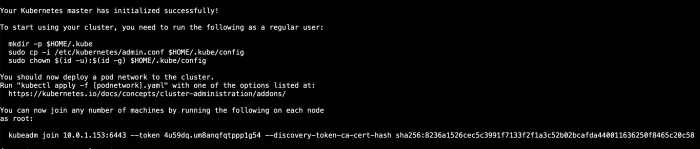

3. Apply the Role:

       kubectl apply -f role.yaml

Bind the Role to the dev User and Verify Your Setup Works

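1. Create the RoleBinding spec file:

       apiVersion: rbac.authorization.k8s.io/v1
       kind: RoleBinding
       metadata:
         name: pod-reader
         namespace: beebox-mobile
       subjects:
       - kind: User
         name: dev
         apiGroup: rbac.authorization.k8s.io
       roleRef:
         kind: Role   # must match the kind of the role being bound
         name: pod-reader   # must match the name of the Role created earlier
         apiGroup: rbac.authorization.k8s.io

2. Apply the RoleBinding by running the following, replacing file-name.yml with the name of your spec file:

       kubectl apply -f file-name.yml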

3. Verify the setup by checking what the dev user is allowed to do. This assumes your current user has permission to impersonate other users:

       kubectl auth can-i list pods --namespace beebox-mobile --as dev

   The command should print yes. The same check for a verb the Role does not grant, such as delete, should print no.

Cheers

Osama