The script below will allow you to list all VCNs in OCI and delete all attached resources to the COMPARTMENT_OCID.

Note: I wrote the scripts to perform the tasks mentioned below, which can be updated and expanded based on the needs. Feel free to do that and say the source

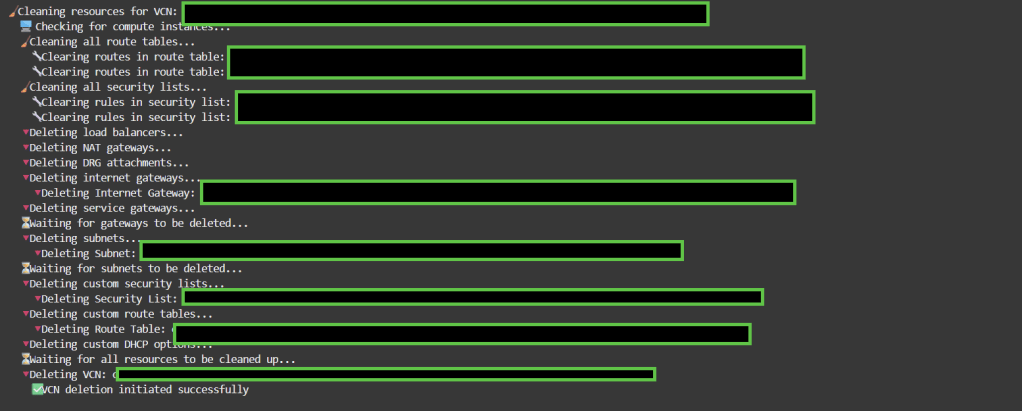

Complete Resource Deletion Chain: The script now handles the proper order of deletion:

- Compute instances first

- Clean route tables and security lists

- Load balancers

- Gateways (NAT, Internet, Service, DRG attachments)

- Subnets

- Custom security lists, route tables, and DHCP options

- Finally, the VCN itself

#!/bin/bash

# ✅ Set this to the target compartment OCID

COMPARTMENT_OCID="Set Your OCID Here"

# (Optional) Force region

export OCI_CLI_REGION=me-jeddah-1

echo "📍 Region: $OCI_CLI_REGION"

echo "📦 Compartment: $COMPARTMENT_OCID"

echo "⚠️ WARNING: This will delete ALL VCNs and related resources in the compartment!"

echo "Press Ctrl+C within 10 seconds to cancel..."

sleep 10

# Function to wait for resource deletion

wait_for_deletion() {

local resource_id=$1

local resource_type=$2

local max_attempts=30

local attempt=1

echo " ⏳ Waiting for $resource_type deletion..."

while [ $attempt -le $max_attempts ]; do

if ! oci network $resource_type get --${resource_type//-/}-id "$resource_id" &>/dev/null; then

echo " ✅ $resource_type deleted successfully"

return 0

fi

sleep 10

((attempt++))

done

echo " ⚠️ Timeout waiting for $resource_type deletion"

return 1

}

# Function to check if resource is default

is_default_resource() {

local resource_id=$1

local resource_type=$2

case $resource_type in

"security-list")

result=$(oci network security-list get --security-list-id "$resource_id" --query "data.\"display-name\"" --raw-output 2>/dev/null)

[[ "$result" == "Default Security List"* ]]

;;

"route-table")

result=$(oci network route-table get --rt-id "$resource_id" --query "data.\"display-name\"" --raw-output 2>/dev/null)

[[ "$result" == "Default Route Table"* ]]

;;

"dhcp-options")

result=$(oci network dhcp-options get --dhcp-id "$resource_id" --query "data.\"display-name\"" --raw-output 2>/dev/null)

[[ "$result" == "Default DHCP Options"* ]]

;;

*)

false

;;

esac

}

# Function to clean all route tables in a VCN

clean_all_route_tables() {

local VCN_ID=$1

echo " 🧹 Cleaning all route tables..."

local RT_IDS=$(oci network route-table list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for RT_ID in $RT_IDS; do

if [ -n "$RT_ID" ]; then

echo " 🔧 Clearing routes in route table: $RT_ID"

oci network route-table update --rt-id "$RT_ID" --route-rules '[]' --force &>/dev/null || true

fi

done

# Wait a bit for route updates to propagate

sleep 5

}

# Function to clean all security lists in a VCN

clean_all_security_lists() {

local VCN_ID=$1

echo " 🧹 Cleaning all security lists..."

local SL_IDS=$(oci network security-list list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for SL_ID in $SL_IDS; do

if [ -n "$SL_ID" ]; then

echo " 🔧 Clearing rules in security list: $SL_ID"

oci network security-list update \

--security-list-id "$SL_ID" \

--egress-security-rules '[]' \

--ingress-security-rules '[]' \

--force &>/dev/null || true

fi

done

# Wait a bit for security list updates to propagate

sleep 5

}

# Function to delete compute instances in subnets

delete_compute_instances() {

local VCN_ID=$1

echo " 🖥️ Checking for compute instances..."

local INSTANCES=$(oci compute instance list \

--compartment-id "$COMPARTMENT_OCID" \

--query "data[?\"lifecycle-state\" != 'TERMINATED'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for INSTANCE_ID in $INSTANCES; do

if [ -n "$INSTANCE_ID" ]; then

# Check if instance is in this VCN

local INSTANCE_VCN=$(oci compute instance list-vnics \

--instance-id "$INSTANCE_ID" \

--query "data[0].\"vcn-id\"" \

--raw-output 2>/dev/null)

if [[ "$INSTANCE_VCN" == "$VCN_ID" ]]; then

echo " 🔻 Terminating compute instance: $INSTANCE_ID"

oci compute instance terminate --instance-id "$INSTANCE_ID" --force &>/dev/null || true

fi

fi

done

}

# Main cleanup function for a single VCN

cleanup_vcn() {

local VCN_ID=$1

echo -e "\n🧹 Cleaning resources for VCN: $VCN_ID"

# Step 1: Delete compute instances first

delete_compute_instances "$VCN_ID"

# Step 2: Clean route tables and security lists

clean_all_route_tables "$VCN_ID"

clean_all_security_lists "$VCN_ID"

# Step 3: Delete Load Balancers

echo " 🔻 Deleting load balancers..."

local LBS=$(oci lb load-balancer list \

--compartment-id "$COMPARTMENT_OCID" \

--query "data[?\"lifecycle-state\" == 'ACTIVE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for LB_ID in $LBS; do

if [ -n "$LB_ID" ]; then

echo " 🔻 Deleting Load Balancer: $LB_ID"

oci lb load-balancer delete --load-balancer-id "$LB_ID" --force &>/dev/null || true

fi

done

# Step 4: Delete NAT Gateways

echo " 🔻 Deleting NAT gateways..."

local NAT_GWS=$(oci network nat-gateway list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for NAT_ID in $NAT_GWS; do

if [ -n "$NAT_ID" ]; then

echo " 🔻 Deleting NAT Gateway: $NAT_ID"

oci network nat-gateway delete --nat-gateway-id "$NAT_ID" --force &>/dev/null || true

fi

done

# Step 5: Delete DRG Attachments

echo " 🔻 Deleting DRG attachments..."

local DRG_ATTACHMENTS=$(oci network drg-attachment list \

--compartment-id "$COMPARTMENT_OCID" \

--query "data[?\"vcn-id\" == '$VCN_ID' && \"lifecycle-state\" == 'ATTACHED'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for DRG_ATTACHMENT_ID in $DRG_ATTACHMENTS; do

if [ -n "$DRG_ATTACHMENT_ID" ]; then

echo " 🔻 Deleting DRG Attachment: $DRG_ATTACHMENT_ID"

oci network drg-attachment delete --drg-attachment-id "$DRG_ATTACHMENT_ID" --force &>/dev/null || true

fi

done

# Step 6: Delete Internet Gateways

echo " 🔻 Deleting internet gateways..."

local IGWS=$(oci network internet-gateway list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for IGW_ID in $IGWS; do

if [ -n "$IGW_ID" ]; then

echo " 🔻 Deleting Internet Gateway: $IGW_ID"

oci network internet-gateway delete --ig-id "$IGW_ID" --force &>/dev/null || true

fi

done

# Step 7: Delete Service Gateways

echo " 🔻 Deleting service gateways..."

local SGWS=$(oci network service-gateway list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for SGW_ID in $SGWS; do

if [ -n "$SGW_ID" ]; then

echo " 🔻 Deleting Service Gateway: $SGW_ID"

oci network service-gateway delete --service-gateway-id "$SGW_ID" --force &>/dev/null || true

fi

done

# Step 8: Wait for gateways to be deleted

echo " ⏳ Waiting for gateways to be deleted..."

sleep 30

# Step 9: Delete Subnets

echo " 🔻 Deleting subnets..."

local SUBNETS=$(oci network subnet list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for SUBNET_ID in $SUBNETS; do

if [ -n "$SUBNET_ID" ]; then

echo " 🔻 Deleting Subnet: $SUBNET_ID"

oci network subnet delete --subnet-id "$SUBNET_ID" --force &>/dev/null || true

fi

done

# Step 10: Wait for subnets to be deleted

echo " ⏳ Waiting for subnets to be deleted..."

sleep 30

# Step 11: Delete non-default Security Lists

echo " 🔻 Deleting custom security lists..."

local SL_IDS=$(oci network security-list list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for SL_ID in $SL_IDS; do

if [ -n "$SL_ID" ] && ! is_default_resource "$SL_ID" "security-list"; then

echo " 🔻 Deleting Security List: $SL_ID"

oci network security-list delete --security-list-id "$SL_ID" --force &>/dev/null || true

fi

done

# Step 12: Delete non-default Route Tables

echo " 🔻 Deleting custom route tables..."

local RT_IDS=$(oci network route-table list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for RT_ID in $RT_IDS; do

if [ -n "$RT_ID" ] && ! is_default_resource "$RT_ID" "route-table"; then

echo " 🔻 Deleting Route Table: $RT_ID"

oci network route-table delete --rt-id "$RT_ID" --force &>/dev/null || true

fi

done

# Step 13: Delete non-default DHCP Options

echo " 🔻 Deleting custom DHCP options..."

local DHCP_IDS=$(oci network dhcp-options list \

--compartment-id "$COMPARTMENT_OCID" \

--vcn-id "$VCN_ID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

for DHCP_ID in $DHCP_IDS; do

if [ -n "$DHCP_ID" ] && ! is_default_resource "$DHCP_ID" "dhcp-options"; then

echo " 🔻 Deleting DHCP Options: $DHCP_ID"

oci network dhcp-options delete --dhcp-id "$DHCP_ID" --force &>/dev/null || true

fi

done

# Step 14: Wait before attempting VCN deletion

echo " ⏳ Waiting for all resources to be cleaned up..."

sleep 60

# Step 15: Finally, delete the VCN

echo " 🔻 Deleting VCN: $VCN_ID"

local max_attempts=5

local attempt=1

while [ $attempt -le $max_attempts ]; do

if oci network vcn delete --vcn-id "$VCN_ID" --force &>/dev/null; then

echo " ✅ VCN deletion initiated successfully"

break

else

echo " ⚠️ VCN deletion attempt $attempt failed, retrying in 30 seconds..."

sleep 30

((attempt++))

fi

done

if [ $attempt -gt $max_attempts ]; then

echo " ❌ Failed to delete VCN after $max_attempts attempts"

echo " 💡 You may need to manually check for remaining dependencies"

fi

}

# Main execution

echo -e "\n🚀 Starting VCN cleanup process..."

# Fetch all VCNs in the compartment

VCN_IDS=$(oci network vcn list \

--compartment-id "$COMPARTMENT_OCID" \

--query "data[?\"lifecycle-state\" == 'AVAILABLE'].id" \

--raw-output 2>/dev/null | jq -r '.[]' 2>/dev/null)

if [ -z "$VCN_IDS" ]; then

echo "📭 No VCNs found in compartment $COMPARTMENT_OCID"

exit 0

fi

echo "📋 Found VCNs to delete:"

for VCN_ID in $VCN_IDS; do

VCN_NAME=$(oci network vcn get --vcn-id "$VCN_ID" --query "data.\"display-name\"" --raw-output 2>/dev/null)

echo " - $VCN_NAME ($VCN_ID)"

done

# Process each VCN

for VCN_ID in $VCN_IDS; do

if [ -n "$VCN_ID" ]; then

cleanup_vcn "$VCN_ID"

fi

done

echo -e "\n✅ Cleanup complete for compartment: $COMPARTMENT_OCID"

echo "🔍 You may want to verify in the OCI Console that all resources have been deleted."Output example

Regards